DEEP FAKE BIRDSONG, 2020

“Deep Fake Birdsong unites art with science to inquire about the electrical nature of lifeforms and to push the envelope of artificial intelligence.”

A CURIOUS BIRD

Deep Fake Birdsong was inspired by my discovery of a simple analog electronic circuit that sings like a bird with remarkable fidelity and variety. “Breadbird #1,” 2019 combines five adjustable oscillators with one modified Hartley oscillator to generate birdlike sounds that are made by pure electronic vibration — no code or audio recording. In the following video, you can see the oscillators beneath the bird (they are color-coded with two LEDs apiece). Inside of the mylar bird sculpture is a modified Hartley oscillator that makes the vibrations audible through a low-cost 8 ohm speaker.

Breadbird #1, 2019. Analog electronic circuit for birdsong generation. 10” x 12” x 6”

Schematic for “Breadbird #1,” 2019. (Updated in 2020 to reflect minor changes)

WHAT KIND OF BIRD IS THIS CIRCUIT?

In Winter 2020, I approached Johann Diedrick with the following experimental question: what would one form of artificial intelligence think about another form? We used his software for birdsong identification (Diedrick’s Flights of Fancy, 2019) to analyze my circuit for birdsong generation (Heaton’s Breadbird #1, 2019).

We wanted to know what his bird identification software would say about the sounds produced by my electronic bird. Would the software think that the circuit is a real bird; and, if so, which species? Correlation with a real bird would be coincidental because I made no effort to engineer a specific species, only generic bird-like sounds. Our experiment was earnest, but also a sort of electronic Dadaism.

EXPERIMENTAL RESULTS

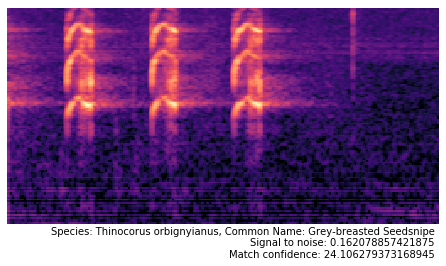

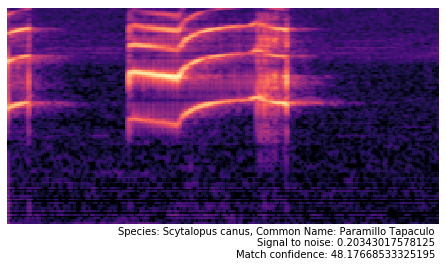

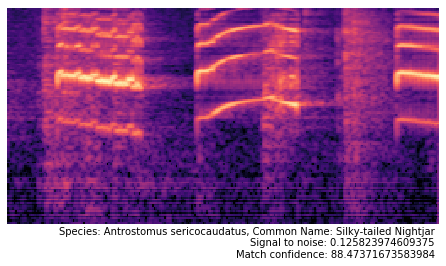

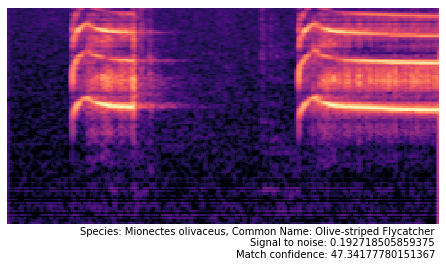

Based on a 2-minute, 2-second (2:02) audio recording of Heaton’s singing circuit, Diedrick generated 122 spectrograms that we ran through his Flights of Fancy model (trained against the BirdCLEF dataset). Out of 1,500 possibilities, Diedrick’s algorithm identified only four possible bird species, with “match confidence” ratings from 19% to 96%. The four identified species are as follows:

Grey-breasted Seedsnipe: 16 matches with an average percent confidence of 26.515

*Silky-tailed Nightjar: 47 matches with an average percent confidence of 93.147

Paramillo Tapaculo: 54 matches with an average percent confidence of 48.991

Olive-striped Flycatcher: 5 matches with an average percent confidence of 47.938

*Notably, 47 of the 122 spectrograms were matched with the Silky-tailed Nightjar (Antrostomus sericocaudatus) with an average percent confidence of 93%.

This is remarkably high confidence for more than a third (about 39%) of the analyzed spectrograms!
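As a quick check on the arithmetic, the short Python sketch below tallies the four results and works out each species' share of the 122 spectrograms. The match counts and confidence figures are copied from the list above; the names RESULTS and summarize are only illustrative and are not part of the Flights of Fancy software.

```python
# Figures copied from the results listed above:
# (species, number of matched spectrograms, average percent confidence).
RESULTS = [
    ("Grey-breasted Seedsnipe", 16, 26.515),
    ("Silky-tailed Nightjar", 47, 93.147),
    ("Paramillo Tapaculo", 54, 48.991),
    ("Olive-striped Flycatcher", 5, 47.938),
]


def summarize(results):
    """Return the total match count and each species' share of it, in percent."""
    total = sum(matches for _, matches, _ in results)
    shares = {name: 100.0 * matches / total for name, matches, _ in results}
    return total, shares


total, shares = summarize(RESULTS)
print(f"spectrograms matched: {total}")
for name, matches, confidence in RESULTS:
    print(
        f"{name}: {matches} matches "
        f"({shares[name]:.1f}% of spectrograms), "
        f"average confidence {confidence:.1f}%"
    )
```

The four counts add up to all 122 spectrograms, and the Silky-tailed Nightjar accounts for roughly 38.5% of them.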

I asked an ornithologist friend for her opinion of the experimental results, and she said that nightjars are pre-passerine birds with a relatively simple song structure, similar to the Breadbird #1 circuit we used for our experiment. This is how I came to name my first commercially available electronic songbird “Nightjar,” available through Adafruit starting in 2023.

TECHNICAL DETAILS OF DIEDRICK’S AI SOFTWARE

Detecting and identifying birds in field recordings has been a long-standing problem in the fields of bioacoustics and acoustic scene recognition [1, 2]. Only in recent years has it been possible to do large-scale bird detection and recognition across large datasets of recordings, facilitated through the use of convolutional neural networks (CNNs) and other deep learning techniques [3]. Through the use of deep neural networks, we can scan through recordings, identify where bird calls are happening, and report back on which bird species produced each call. In Diedrick’s work Flights of Fancy, he was able to train a CNN through transfer learning to recognize birds found in the BirdCLEF dataset, which contains over 36,000 recordings across 1,500 species of birds, primarily from South America [4, 5]. With this trained model, it is possible to take new recordings that the model has never seen before, pass them through the software system, and produce a prediction as to which bird species the system thinks is contained within each recording (based on the bird species in the dataset).

The Flights of Fancy software system was developed as follows. First, the BirdCLEF 2018 dataset was downloaded and sorted so that the audio recordings for each species sit in a folder named for that species. This way, the folder name serves as the training label for every recording inside it. Next, a data pre-processing step went through all of the recordings and segmented out bird calls using a signal-to-noise heuristic, which treats a stretch of signal with a significant amount of upward or downward variation as a chirp. The system saves these segments as spectrogram images (a frequency representation of a signal over time), again in a folder named after each species, to be used for training. Finally, the system uses a technique known as transfer learning [6]: it takes a specific kind of neural network (ResNet) already pre-trained on images (ImageNet) and leverages those pre-trained weights to train more effectively on our generated spectrogram images of bird calls. From there, our model was trained down to a 27% error rate when predicting the species of a bird call from spectrograms drawn from that specific dataset.
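To give a feel for the segmentation step, here is a minimal Python sketch of one way a signal-to-noise heuristic can pick out call-like stretches of a recording. It is not Diedrick's code. It swaps in a simple energy-based stand-in, and the function names, the 400-sample frame length, the threshold of 4.0, and the median noise-floor estimate are all assumptions made for illustration. It also stops at finding the segments and does not render them as spectrogram images.

```python
import math
import statistics


def frame_energies(samples, frame_len):
    """Mean squared amplitude of each non-overlapping frame."""
    n_frames = len(samples) // frame_len
    return [
        sum(s * s for s in samples[i * frame_len:(i + 1) * frame_len]) / frame_len
        for i in range(n_frames)
    ]


def find_call_segments(samples, frame_len=400, snr_threshold=4.0):
    """Return (start, end) sample ranges whose energy stands out from the noise.

    Assumption: the noise floor is the median frame energy, which only makes
    sense when calls fill less than half of the recording.
    """
    energies = frame_energies(samples, frame_len)
    noise_floor = max(statistics.median(energies), 1e-12)
    segments, start = [], None
    for i, energy in enumerate(energies):
        is_call = energy / noise_floor >= snr_threshold
        if is_call and start is None:
            start = i  # a call-like stretch begins at this frame
        elif not is_call and start is not None:
            segments.append((start * frame_len, i * frame_len))
            start = None
    if start is not None:  # the recording ended during a call
        segments.append((start * frame_len, len(energies) * frame_len))
    return segments


# Synthetic one-second test signal: a quiet 100 Hz hum with one loud
# 3 kHz burst between samples 3200 and 4800.
SAMPLE_RATE = 8000
signal = [0.01 * math.sin(2 * math.pi * 100 * t / SAMPLE_RATE) for t in range(SAMPLE_RATE)]
for t in range(3200, 4800):
    signal[t] += 0.5 * math.sin(2 * math.pi * 3000 * t / SAMPLE_RATE)

segments = find_call_segments(signal)
print(segments)  # [(3200, 4800)]
```

In a pipeline like the one described above, each detected segment would then be converted to a spectrogram image and saved under its species folder for training.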

References:

[1] V. Lostanlen, J. Salamon, A. Farnsworth, S. Kelling, and J. P. Bello. "BirdVox-full-night: a dataset and benchmark for avian flight call detection." Proc. IEEE ICASSP, 2018.

[2] J. Salamon, J. P. Bello, A. Farnsworth, M. Robbins, S. Keen, H. Klinck, and S. Kelling. "Towards the Automatic Classification of Avian Flight Calls for Bioacoustic Monitoring." PLoS One, 2016.

[3] https://arxiv.org/pdf/1804.07177.pdf

[4] https://github.com/aquietlife/flightsoffancy/blob/master/flightsoffancy.ipynb

[5] https://www.aicrowd.com/challenges/lifeclef-2018-bird-monophone

[6] https://docs.fast.ai/vision.learner.html#Transfer-learning

“The original question, ‘Can machines think?’ I believe to be too meaningless to deserve discussion.”